Share Traders Invest Billions on Signal. Telcos Invest in Noise

Quants have become the rockstars of modern share trading – extracting powerful signals from oceans of data at near real-time speed. Trading firms invest billions

We’re already seeing it. AI is slowly infiltrating telecom, one little project at a time… monitoring, automating, optimising.

In AI, we have one of the most disruptive opportunities of our lifetime, but the results of telco AI projects to date still feel eerily familiar.

Are most telcos using tomorrow’s tools with yesterday’s thinking? Maybe the real breakthroughs will not come from subtly refining the machine, but from breaking and rebuilding it in ways even the highly risk-averse telco industry can tolerate.

Today’s article poses a series of five contrarian questions for you. They ask you to consider how we might use AI to disrupt the telco industry whilst working within a system that’s been engineered to prevent disruption.

And what on earth do Rita Hayworth, Marilyn Monroe, and Raquel Welch have to do with it?

.

Telco has never been an industry that embraces disruption lightly. Reliability and predictability have shaped our culture for decades. These traits have produced amazing stability and scale (for the most part), but they have also carved deep grooves into how the industry thinks about new technology. When I said “grooves,” I actually meant a rut!

Incremental thinking becomes incremental culture. Small changes feel safer. Big ideas feel dangerous.

Nothing happens quickly, which is the structural disadvantage facing many telcos, as we discussed in our previous article.

Within this framing of stability and reliability, it’s no surprise then that AI is being used with a similar focus – small, iterative, low-risk improvements.

Most AI deployments in telco today automate, scale or optimise processes that already exist. Fault detection / response. Ticket handling. Customer segmentation. Workflow acceleration. Alternatively, they act as glue between systems or overcome functionality gaps in foundational OSS/BSS tools.

These are important, yet they are tightly anchored to the past. Like so much of our transformation thinking, they simply extend what we already have rather than helping us imagine something revolutionary instead. It’s AI as a wrapper (as opposed to AI rappers).

Many of the processes we are now optimising were never designed for algorithmic implementation, speed, agility or intelligence. In fact, many were designed explicitly to be human-led. Blindly using AI to automate legacy processes is kinda stupid when you think about it.

But we also need to be pragmatic. It’s totally unrealistic to expect telco’s entrenched risk aversion to evaporate overnight. Stability and reliability are foundational pillars of telco business models.

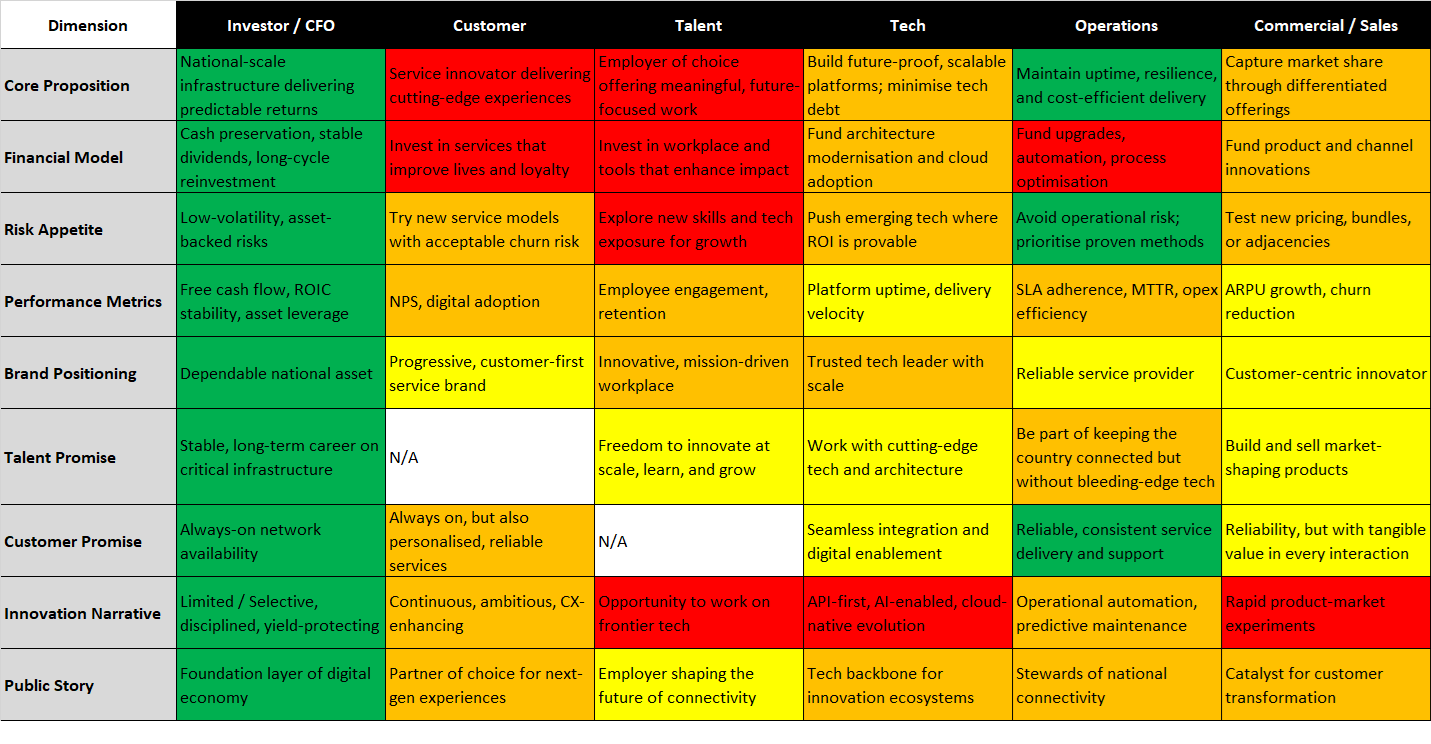

The industry is built on the promise of stability. This article about telco world views provides a view into the very human telco psyche. Check out the differences in Risk Appetite specifically.

But I’m not going to be defeatist! These constraints don’t mean our thinking must remain small.

Quite the opposite. We have to use this disruptive technology to reinvent telco… one step at a time.

The cool thing is we finally have a set of tools capable of helping us experiment safely and at scale (i.e. the potential for an explosion in the number of “one steps at a time” we can take in a short timeframe).

.

There is another possibility though.

Individual countries tend to have very high expectations of their local telcos. Regulators in each local jurisdiction watch them like a hawk. More importantly, outages make headlines.

That’s why the fear of outages makes experimentation feel so dangerous.

However, if we dig just one layer deeper, experimentation feels dangerous because so many of our systems feel fragile. Not necessarily fragile in isolation, but definitely fragile as a collective.

Decades of layering, coupling, abstraction and patching have produced architectures that behave more like tightly tuned instruments than resilient ecosystems. A single change can ripple unpredictably across domains. Adjust one setting and another subsystem groans under the pressure.

The result is a kind of institutional fear. In the pottery class fable, the group graded on quantity ends up producing better-quality pots than the group graded on quality, because they practise constantly. Telco has become the opposite. We make so few changes that we never build the confidence to move fast.

Like helicopter parents, the more we shield our systems from change, the more brittle they feel. The more brittle they feel, the less we want to change them. It becomes a self-reinforcing, self-fulfilling loop.

Another very real challenge is our lack of insight into how the complexity of our networks and systems behaves under stress. The complexity has proliferated so much that we never get even close to testing all the possible fail states. We tend to be good at intra-domain testing, but not so good at interop / inter-domain testing.

I’m taking a big guess here, but I’d estimate our test coverage on inter-domain scenarios is probably less than 5% of the intra-domain testing scenarios. What do you reckon it would be?
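To put a rough shape on why inter-domain coverage lags so far behind, here is a back-of-envelope sketch in Python. The numbers are purely hypothetical (six domains, ten distinct fail states each) and the function name `scenario_counts` is made up for illustration. It is not a measurement of any real network, just a way of showing how quickly combined fail states outgrow single-domain ones.

```python
from math import comb


def scenario_counts(domains: int, fail_states_per_domain: int) -> dict:
    """Count fail-state scenarios under a deliberately simple model.

    Assumption: each domain is either healthy or in exactly one of its
    fail states, and domains fail independently of each other.
    """
    k = fail_states_per_domain

    # Intra-domain: exactly one domain is in one of its fail states.
    intra = domains * k

    # Pairwise inter-domain: pick two domains, one fail state in each.
    pairwise = comb(domains, 2) * k ** 2

    # All inter-domain combinations: every assignment of
    # (healthy or one fail state) per domain, minus the all-healthy case
    # and minus the single-domain cases already counted as intra.
    inter_all = (k + 1) ** domains - 1 - intra

    return {"intra": intra, "pairwise": pairwise, "inter_all": inter_all}


# Hypothetical example: 6 domains, 10 fail states per domain.
counts = scenario_counts(domains=6, fail_states_per_domain=10)
print(counts)
```

Even in this toy model, the pairwise scenarios alone outnumber the intra-domain ones many times over, which is one way to see why exhaustive inter-domain testing is rarely attempted.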

.

Release the Chaos Monkeys!

This is where chaos engineering becomes relevant. It’s a fascinating concept because it provides a disciplined way to introduce controlled failures, observe system responses and strengthen weak points. Chaos engineering builds confidence the same way athletic training builds muscle. You stress the system in safe conditions so you can trust it in real ones. The opposite of being helicopter parents.
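As a minimal sketch of that idea, the Python below models a handful of services, injects one controlled failure at a time and reports the "blast radius" of each. Everything here is an assumption for illustration: the service names, the `Service` class and the `chaos_sweep` helper are hypothetical, and a real chaos experiment would run against a live or staging environment with proper guard rails rather than an in-memory model.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    name: str
    depends_on: list = field(default_factory=list)  # hard dependencies
    fallbacks: dict = field(default_factory=dict)   # dependency -> backup service


def is_healthy(name, services, failed, _seen=None):
    """Healthy means: not failed, and every dependency (or its fallback) is healthy."""
    seen = _seen or set()
    if name in failed or name in seen:  # 'seen' guards against dependency cycles
        return False
    seen = seen | {name}
    svc = services[name]
    for dep in svc.depends_on:
        if is_healthy(dep, services, failed, seen):
            continue
        backup = svc.fallbacks.get(dep)
        if backup is None or not is_healthy(backup, services, failed, seen):
            return False
    return True


def run_experiment(services, target):
    """Inject a single controlled failure and list the other services it takes down."""
    failed = {target}
    return sorted(
        n for n in services
        if n != target and not is_healthy(n, services, failed)
    )


def chaos_sweep(services):
    """Fail each service in turn and record the blast radius of each experiment."""
    return {target: run_experiment(services, target) for target in services}


# Hypothetical topology: ticketing can fall back to a cache, activation cannot.
services = {
    s.name: s
    for s in [
        Service("inventory"),
        Service("inventory_cache"),
        Service("activation", depends_on=["inventory"]),
        Service("ticketing", depends_on=["inventory"],
                fallbacks={"inventory": "inventory_cache"}),
        Service("assurance", depends_on=["ticketing"]),
    ]
}

results = chaos_sweep(services)
for target, impacted in results.items():
    print(f"fail {target}: impacted = {impacted}")
```

In this toy topology, the sweep shows that activation has no protection against an inventory failure while ticketing does. That is the kind of weak point a chaos experiment is meant to surface before a real outage does.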

If telco truly wants to use AI as a force for reinvention, it probably first needs to address its resilience confidence. AI can help us experiment faster, but only if the underlying environment is robust enough to handle a higher tempo of change.

.

The industry often talks about AI and automation as if they are interchangeable. They’re not.

Automation is about repeatability. It is about taking a known sequence of actions and executing them consistently, which isn’t always a strength of LLMs.

AI, at its best, is about coordination. It is about making sense of intent, interpreting context and adjusting behaviour based on emerging insight.

The Juggling Jugglers analogy captures this well. Automation is the individual juggler repeating a known pattern the same way over and over.

AI is the ringmaster managing many jugglers at once, ensuring the entire routine aligns to a larger objective.

But that’s not how telco thinks of AI today. We use AI for the automation tasks (jugglers) that we already understand (and have often developed rules engines for), rather than the coordination challenges (ringmasters) we struggle with.

AI can help us understand the millions of plausible variations that sit between the extremes of normal and pathological network behaviour. It can help us mimic and observe issues before they occur. It can highlight risks we did not know we were carrying. It can guide us towards better outcomes rather than simply performing tasks faster.

If we truly want transformation, we must shift our primary use of AI from execution to intelligence and intent (the higher-power optimisation).

.

Transformation is often portrayed as one dramatic leap. The big-bang cutover has been a part of telco culture for decades. Thinking out loud, it’s probably a big reason why telco is so averse to risk and change!

In reality, most meaningful change emerges through a long series of small adjustments. Autonomous operations has already shown this. One automated fault scenario at a time. One use case. One narrow path to resolution. The system slowly becomes capable of handling nearly everything except the rarest events.

This is the AIOps Asymptote below, which we talk about here.

This is progress, but it’s also a case of focusing on treating the symptoms / maladies rather than diagnosing the cause and seeking a permanent cure.

AI creates an opportunity to move beyond this. It allows us to run many more experiments, far more quickly, with far closer scrutiny (observability).

But again, that is only possible if our environment is resilient enough to tolerate experimentation. We need system confidence before we can have experimentation confidence.

When that resilience is established, something powerful might just happen.

Micro-experiments begin to compound. Each small success removes a small piece of friction. Each small failure provides greater focus on what’s working.

Over time, we can implement a revolutionary transformation under the guise of hundreds of small shifts. A transformation so large that, attempted in one go, the telco immune system would notice it and fight it off.

Instead, our strategy becomes like the posters of Rita Hayworth, then Marilyn Monroe, and finally Raquel Welch in The Shawshank Redemption. They are a cover for the millions of tiny changes that AI has made, chipping away at the prison cell wall over a period of years without being noticed.

.

The uncomfortable truth is that most AI deployments today replicate legacy assumptions. The same decisions get made, only quicker, not differently.

Game-changers think and behave differently.

Steph Curry didn’t break basketball’s rules. Yet you could argue that he re-invented how it was played.

He changed the geometry of the game within boundaries that kept the game recognisable.

Telco has similar constraints. Regulations. Standards. Interoperability. Safety. These aren’t going to simply evaporate in the face of a new technology like AI.

But just as Curry was the catalyst for a basketball revolution, AI gives us the perfect opportunity to totally re-invent the telco playbook.

.

#OSS #BSS #telco #telecommunications

PS. If you are interested in building more OSS/BSS/telco foundational context awareness, you might also like to take advantage of our Black Friday OSS/BSS Foundations Starter Kit. It contains two of our most important books (e-books) and an OSS foundations training course.
